Data Engineering become a critical part in the past few years in almost any organization that use data heavily in their system, and I am sure you heard a lot about the comparison between data engineers and data scientist and which is better but actually, there is no role is better than another role, each role has its own path of learning, required skills, and technologies, of course, there is common ground between both in skills and technologies used.

Data Engineer in simple and direct words is responsible to design, implement, and maintain data pipelines with different types of data processing such as batch, or streaming processing for the organization.

According to Glassdoor average salary for a data engineer in the United States is around 102K as of today.

In this article we will not do a comparison between a data engineer and data science, instead, we will go through all required skills, technologies, and concepts you need to master to become a professional data engineer, and we will try to link to references whenever possible for each topic.

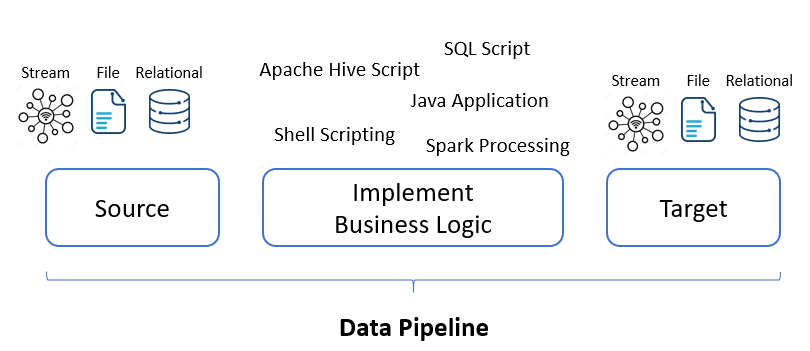

As we mentioned data engineer is responsible for the design, implementation, and maintenance of data pipelines and any data pipelines consist of three main components, source of data, business logic to be applied, and target system to load data to it.

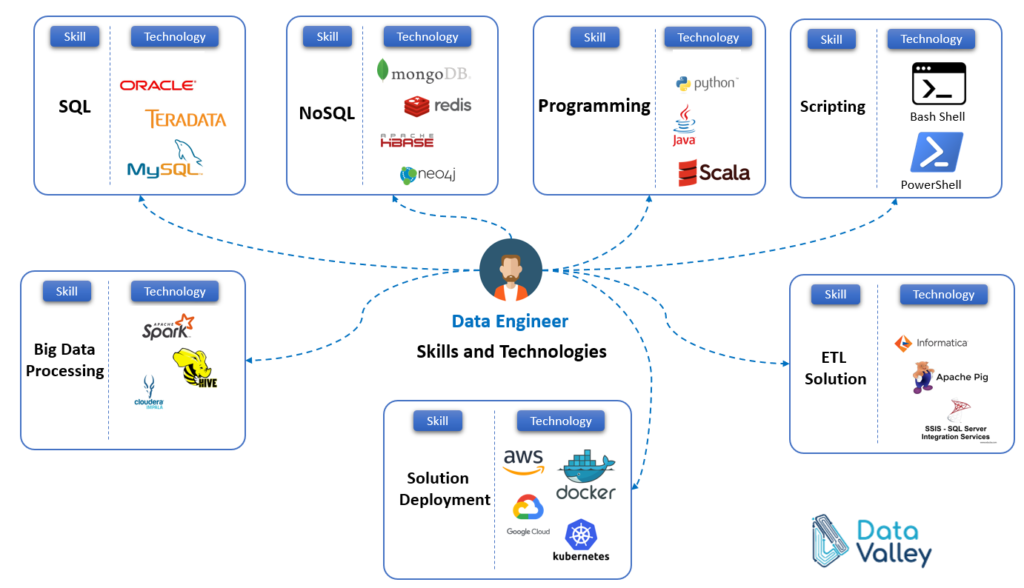

Let’s now move to skills and technologies that any data engineer will interact with, not necessarily all mentioned technologies you will need to know it totally depends on existing data sources in your organization, but we tried to mention the most popular examples for each.

Skills and Technologies

SQL Scripting

One of the famous sources of data any data engineer will interact with is relational database systems, almost in any organization, you will have a source or two of relational databases. data engineers should have the skill to query out data or manipulate data in RDBMS using the standard SQL. SQL is a standard language in all RDBMS vendors will support the standard syntax of SQL, however, each RDBMS vendor will have some specific functions tailored specifically to its solution, and this kind of specific functions or specific syntax you need also to be aware of, as using some of this functions will impact the performance of your queries. Also, you need to understand the architecture of existing RDBMS as understanding this will help you choose the correct structure for your tables, primary keys, and partitioning technique for your tables.

Summary

- Understand standard SQL

- Extract and manipulate data using SQL

- Understand RDBMS architecture, for example, Teradata stored data in distributed storage, which needs a careful choice of Primary Key

- Understand RDBMS vendor special functions and special syntax

Technologies

NoSQL Scripting

Another type of database is NoSQL which stores data in a different way other than the tabular format used by RDBMS. We have different types of NoSQL databases, and each type of data has its own special cases, so it is important to understand the use case and structure of your data to understand what is the best solution fit your data and your application. In NoSQL, we have a Columnar database that stores data in a separate storage file for each column in your table, and this best fit for analytics workload, Key/Value database, which is best fit for use cases where need fast access to data is required a famous use case for this is storing website user session data, Graph database which stores data as nodes and store also relations between nodes the best use case for such database is Fraud analysis and social network analysis, Document database which stores data a JSON object, this kind of database is best fit for applications that needs dynamic data access or flexible schema

Summary

- Understand the type of data, and required processing for the use case to choose the best type of NoSQL database

- Understand the query language syntax to query each kind of database (Columnar, Graph, Key/Value, Document)

Technologies

Programming

Sometimes you will need to write a specific application tailored to your use case to solve a certain data problem, to handle a specific data processing requirement, or to read data from APIs, you have multiple options to use based on your needs and based on the source of data you need to retrieve data from, for example, if the client you will use to retrieve data supports only specific APIs such as Python, Java, or Scala

Summary

- Select programming language for your task based on API, use case, or source of data client you need to connect to

Technologies

Operating System Scripting

Operating system scripting such as Shell scripting or Powershell scripting in some cases will be used to do operational or orchestration jobs

Technologies

Big Data Processing

Currently, with the big movement for most organizations towards big data technologies adoption, processing data from in a big data environment is considered a mandatory task for all data engineers, choosing the best technology for your task depends totally on your data format and required data processing for your use case, for example, Apache Spark can process data in batch mode and streaming mode with support to graph processing and machine learning models implementation, on the other hand, Apache Hive support batch processing of data using HiveQL which is pretty similar to standard SQL

Summary

- To choose the best big data processing solution, understand very well the structure of data in your use case, and type of data processing needed

Technologies

ETL Implementation

As a Data Engineer ETL (Extract Transform Load) is a mandatory concept to know, you have a variety of options that will help you implement this concept in your project such as Informatica PowerCenter which is a leading solution in the ETL field, you can also implement ETL concept using Apache Spark, or Apache Pig scripting, plus many other solutions available. To know how to choose the best ETL solution for your project check our previous article on the criteria that will help you select the best fit for your case

Technologies

Application Deployment

To deploy your work you have three options, either on-premise, on the cloud, or on Dockers, that’s why it is important for you as a data engineer to be aware of the available solutions on the cloud and also to be aware of docker technologies and how to deploy your application on dockers.

Technologies

Nice to Have

There are other skills that are not mandatory for a data engineer but it will be nice to have added to the skill-set of the data engineer, such as data visualization which will help data engineers to profile data and visualize data after enriching and processing

Concepts

Data Warehouse

Data Warehouse is one of the most important components of any organization, as Data Warehouse serves as the single point of truth for the organization and the main, and center source of organization KPIs. In the Big Data era still, Data Warehouse has significant importance in any organization architecture, as it is the interface layer for organization business users, which they use to access organization KPIs.

What to know

- Data Warehouse Components

- Data Warehouse Design process

- Data Warehouse Types of tables

- Dimension types

- Facts Types

- Slowly Changing Dimension Types

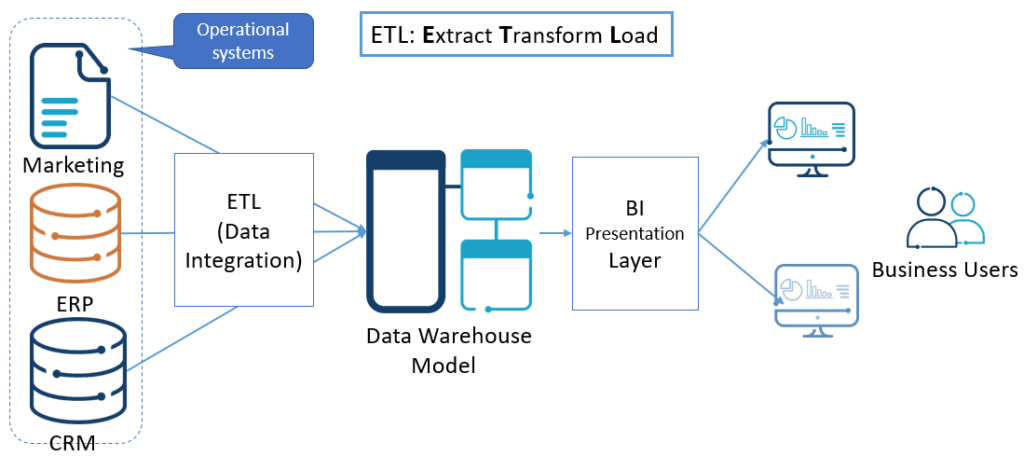

ETL (Extract Transform Load)

ETL is a general concept that represents three phases of data processing, Extract represents the extraction of data from the source system regardless of its type (Database, file,…etc..). Transform represents all kinds of transformations you apply on the data extracted from the source to filter, enrich data, restructure data format, merge with other datasets, and many other transformations can be applied to source data. The Load represents the final stage of the process by loading the transformed data to the target system.

ETL can be implemented with many solutions or technologies as we mentioned earlier in this article